A brief history

Starting in the early 2000s, Deep Learning began to take shape through the development of the first tools and libraries, such as Matlab, Torch and OpenNN, which helped statisticians and mathematicians implement the first DL models. Over the years, technological advances and the extra computing power provided by GPUs encouraged large companies and communities to develop more powerful and comprehensive tools such as Caffe, Chainer and Theano. A decisive moment was the success of AlexNet, the first deep convolutional network to win the ImageNet image classification competition (ILSVRC 2012), with a top-5 error rate roughly 10 percentage points lower than the runner-up.

However, a major boost to the field of artificial intelligence came in 2015, when Google open-sourced its deep learning framework, Tensorflow. The tool was quickly adopted in both industry and academia. Around the same time, other frameworks were released, such as Caffe2, Pytorch (a Python-first successor to Torch developed by Facebook AI Research, FAIR), Microsoft's CNTK, MXNet and Keras (a high-level API originally built on top of Theano and later Tensorflow, designed for more intuitive and faster coding).

Today, the most widely used tools are Tensorflow, Keras and Pytorch. There is no single best framework; each has characteristics that make it the right choice in certain cases. Below we look at the pros and cons of each tool and at the scenarios where each one fits best.

Tensorflow

Tensorflow is a framework developed by Google for building and training deep learning models. In its original (1.x) design it follows the symbolic, define-and-run paradigm: a computational graph with its placeholders is defined up front and then fed with data inside a session at runtime (Tensorflow 2.x switched to eager execution by default, while still allowing graphs to be built with tf.function). It supports both CPU and GPU computation via CUDA and integrates very well with NumPy arrays and functions. It is released under the Apache 2.0 license, so it is free to use, modify and include in commercial products. The main APIs are available in Python and C++. Tensorflow has become one of the most popular deep learning frameworks, allowing the building of sophisticated custom models and new DL architectures. However, it requires a certain degree of expertise, which makes it less suitable for beginners.
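To make the define-and-run idea concrete, here is a toy, pure-Python sketch that needs no Tensorflow install. `Node`, `Placeholder`, `Constant`, `add`, `mul` and `Session` are hypothetical stand-ins that only mimic the pattern described above (build the graph first, then feed data into placeholders inside a session); they are not Tensorflow's classes or signatures.

```python
# Toy illustration of the "define-and-run" (symbolic) style of Tensorflow 1.x.
# All names below are hypothetical pure-Python stand-ins, not Tensorflow's API.

class Node:
    """A symbolic operation: nothing is computed when the node is created."""
    def __init__(self, op, *inputs):
        self.op = op          # function applied only when the graph is run
        self.inputs = inputs  # upstream nodes

class Placeholder(Node):
    """An input slot whose value is supplied later through feed_dict."""
    def __init__(self, name):
        super().__init__(None)
        self.name = name

class Constant(Node):
    """A fixed value stored in the graph."""
    def __init__(self, value):
        super().__init__(None)
        self.value = value

def add(a, b):
    return Node(lambda x, y: x + y, a, b)

def mul(a, b):
    return Node(lambda x, y: x * y, a, b)

class Session:
    """Evaluates a node by recursively walking the graph with the fed values."""
    def run(self, node, feed_dict):
        if isinstance(node, Placeholder):
            return feed_dict[node]
        if isinstance(node, Constant):
            return node.value
        args = [self.run(n, feed_dict) for n in node.inputs]
        return node.op(*args)

# 1) Define the graph y = w * x + b (no arithmetic happens here).
x = Placeholder("x")
w = Constant(2.0)
b = Constant(1.0)
y = add(mul(w, x), b)

# 2) Run it inside a session, feeding data into the placeholder.
sess = Session()
print(sess.run(y, feed_dict={x: 3.0}))   # 7.0
print(sess.run(y, feed_dict={x: -1.0}))  # -1.0
```

Creating `y` performs no arithmetic; values only flow when `Session.run` walks the graph. Having the whole graph available before execution is one reason a real framework can optimize it or place it on specific devices.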

Pros:

- Computational graph abstraction

- Tensorboard interface to monitor model training

- GPU support

- Numpy-like Tensors

Cons:

- Not easy for beginners

- Slower than other frameworks

- No commercial support

Pytorch

Pytorch is a framework based on Torch, the scientific computing library that supports a wide range of machine learning algorithms. Pytorch replaces Torch's Lua interface with Python and builds the computational graph dynamically as the code runs (define-by-run), with GPU acceleration. It supports the creation of complex deep learning models, from convolutional and sequence models to reinforcement learning agents and transformers. Because of this, it is widely used in research for prototyping and testing new architectures, and pre-trained state-of-the-art models are often easier to find in Pytorch format than in other formats. Pytorch is backed by excellent documentation and a strong community, even though it is not very easy for a beginner. It is written in C++ and Python and supports GPU acceleration mainly through CUDA.
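The define-by-run style behind Pytorch's dynamic graphs can be sketched in a few lines of plain Python. In this toy version (hypothetical `Value` class and `power` helper, scalars only, multiplication only), the graph is recorded while ordinary code runs, so a Python loop decides its shape, and `backward()` then applies the chain rule. In Pytorch itself the equivalent is a tensor created with `requires_grad=True` followed by a call to `.backward()` on the result.

```python
# Toy sketch of define-by-run automatic differentiation (scalar values only).
# "Value" and "power" are hypothetical names, not Pytorch's API.

class Value:
    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents   # values this one was computed from
        self._backward_fn = None  # pushes self.grad back to the parents

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            # Product rule: d(a*b)/da = b, d(a*b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Topologically sort the graph recorded during the forward pass,
        # then apply the chain rule from the output back to the inputs.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            if v._backward_fn is not None:
                v._backward_fn()


def power(x, n):
    # A plain Python loop: the recorded graph has n-1 multiplications,
    # so its shape depends on n and is decided only at run time.
    y = x
    for _ in range(n - 1):
        y = y * x
    return y


x = Value(2.0)
y = power(x, 3)        # forward pass builds the graph x * x * x
y.backward()           # reverse pass fills in x.grad
print(y.data, x.grad)  # 8.0 12.0
```

Because the graph is rebuilt on every forward pass, debugging works with ordinary Python tools, which is a large part of why this style is popular for research prototypes.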

Pros:

- Modular, class-based structure that feels like plain Python

- Easy customization: you can write your own layers or architectures

- Automatic GPU recognition and support

- Large community with pretrained models

Cons:

- No commercial support

- Requires more code than higher-level frameworks such as Keras

Keras

If you ask AI engineers which framework they started developing with, in most cases they will answer Keras. In fact, Keras is considered the friendly version of Tensorflow: a high-level, open-source neural network API written in Python that runs on top of Tensorflow or Theano. Its purpose is to shorten the time between an idea and a result. Several features make Keras easy to use: an API similar to NumPy's, automatic differentiation (backpropagation is handled for you), and a more Pythonic approach in which the computational graph is managed behind the scenes. Keras supports GPU computation and, although it is written in Python, it delegates the heavy numerical work to its backend's optimized C++/CUDA kernels.
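The "idea to result" philosophy largely comes down to hiding the training loop. The sketch below is a hypothetical, pure-Python imitation of that style: the user only calls `compile`, `fit` and `predict`, while gradient computation and parameter updates happen inside `fit`. For brevity, the gradients of a one-feature linear model are hard-coded here; real Keras derives them automatically through its backend, and its actual `Model.compile` and `Model.fit` take much richer arguments (optimizer, loss, metrics, batch size and so on).

```python
# Hypothetical high-level API in the spirit of Keras (not Keras itself):
# the user never writes backpropagation or the training loop.

class LinearRegressor:
    """One-feature model y = w * x + b trained with mean squared error."""

    def __init__(self):
        self.w, self.b = 0.0, 0.0

    def compile(self, learning_rate=0.01):
        # Store training settings, loosely mirroring a compile step.
        self.lr = learning_rate

    def fit(self, xs, ys, epochs=100):
        n = len(xs)
        for _ in range(epochs):
            preds = [self.w * x + self.b for x in xs]
            # Gradients of the MSE loss, computed behind the scenes
            # (hard-coded for this model; a real framework uses autodiff).
            grad_w = sum(2 * (p - t) * x for p, t, x in zip(preds, ys, xs)) / n
            grad_b = sum(2 * (p - t) for p, t in zip(preds, ys)) / n
            self.w -= self.lr * grad_w
            self.b -= self.lr * grad_b
        return self

    def predict(self, xs):
        return [self.w * x + self.b for x in xs]


model = LinearRegressor()
model.compile(learning_rate=0.05)
model.fit([0, 1, 2, 3], [1, 3, 5, 7], epochs=2000)  # data follows y = 2x + 1
print(round(model.w, 3), round(model.b, 3))         # close to w=2, b=1
```

The trade-off listed in the cons below is visible even here: as long as the model fits the built-in pattern, three calls are enough, but a non-standard layer or loss means reaching inside `fit`.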

Pros:

- Easy-to-use API, even for beginners

- Supports several backends like Theano, Tensorflow, and Deeplearning4J

- Large community with pre-trained models

Cons:

- Harder to implement custom layers or unusual architectures

- Slower than other frameworks

What about cross-compatibility?

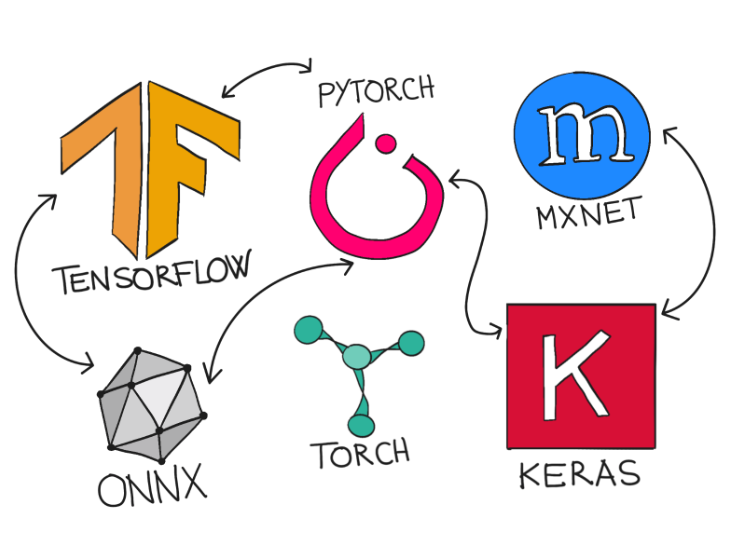

A common problem for AI engineers is compatibility between frameworks. Very often a model has been pre-trained in, for example, Pytorch, while Tensorflow is needed because of tool preferences or deployment requirements. To address this, developers from Microsoft and Facebook, later joined by other tech companies and the community, created a new tool: ONNX.

ONNX stands for Open Neural Network Exchange and is an open-source project. Its main purpose is to give researchers and developers a way to convert models between frameworks. It defines a standard format to describe deep learning models (a graph of operators together with their weights), so that a model can be executed across different software and even hardware, regardless of programming language, framework or execution platform. The ONNX ecosystem also offers a dedicated inference engine, ONNX Runtime, which runs models directly on target devices, even though ONNX is mainly used as an intermediate format for conversion across frameworks.
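Conceptually, an exchange format separates the program that produces a model from the one that runs it. The toy sketch below, assuming a made-up JSON layout rather than ONNX's real protobuf schema, "exports" a small graph of operators (named after the ONNX operators `MatMul`, `Add` and `Relu`) together with its weights, and a separate mini runtime executes it knowing only the operators. In practice the export would be done by a framework tool such as `torch.onnx.export`, and inference by ONNX Runtime's `InferenceSession`.

```python
import json

# Hypothetical "exporter" output: a framework-neutral description of
# y = Relu(x @ W + B). Field names loosely echo ONNX nodes (op_type,
# inputs, outputs), but this JSON layout is a toy, not the real format.
model_description = {
    "inputs": ["x"],
    "outputs": ["y"],
    "initializers": {"W": [[1.0, -1.0], [0.5, 2.0]], "B": [0.0, -4.0]},
    "nodes": [
        {"op_type": "MatMul", "inputs": ["x", "W"], "outputs": ["h"]},
        {"op_type": "Add", "inputs": ["h", "B"], "outputs": ["z"]},
        {"op_type": "Relu", "inputs": ["z"], "outputs": ["y"]},
    ],
}
serialized = json.dumps(model_description)  # what would be written to disk

# Hypothetical "runtime": it knows the operators, not the producing framework.
OPS = {
    # Row vector times matrix: out[j] = sum_i v[i] * m[i][j]
    "MatMul": lambda v, m: [sum(v[i] * m[i][j] for i in range(len(v)))
                            for j in range(len(m[0]))],
    "Add": lambda a, b: [p + q for p, q in zip(a, b)],
    "Relu": lambda a: [max(0.0, p) for p in a],
}

def run(serialized_model, feeds):
    graph = json.loads(serialized_model)
    values = dict(graph["initializers"])  # weights shipped with the model
    values.update(feeds)                  # user-provided inputs
    for node in graph["nodes"]:           # nodes stored in topological order
        args = [values[name] for name in node["inputs"]]
        values[node["outputs"][0]] = OPS[node["op_type"]](*args)
    return {name: values[name] for name in graph["outputs"]}

print(run(serialized, {"x": [1.0, 2.0]}))  # {'y': [2.0, 0.0]}
```

Nothing in `run` depends on how the model was built, which is the property that lets one framework export a model and a different framework or runtime execute it.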

This article has been written by Giovanni Nardini – R&D Artificial Intelligence Lead